New research from the Oxford Internet Institute at the University of Oxford and the University of Kentucky finds that ChatGPT systematically favours wealthier, Western regions in response to questions ranging from 'Where are people more beautiful?' to 'Which country is safer?' - mirroring long-standing biases in the data it ingests.

Kagi had a good little example of language biases in LLMs.

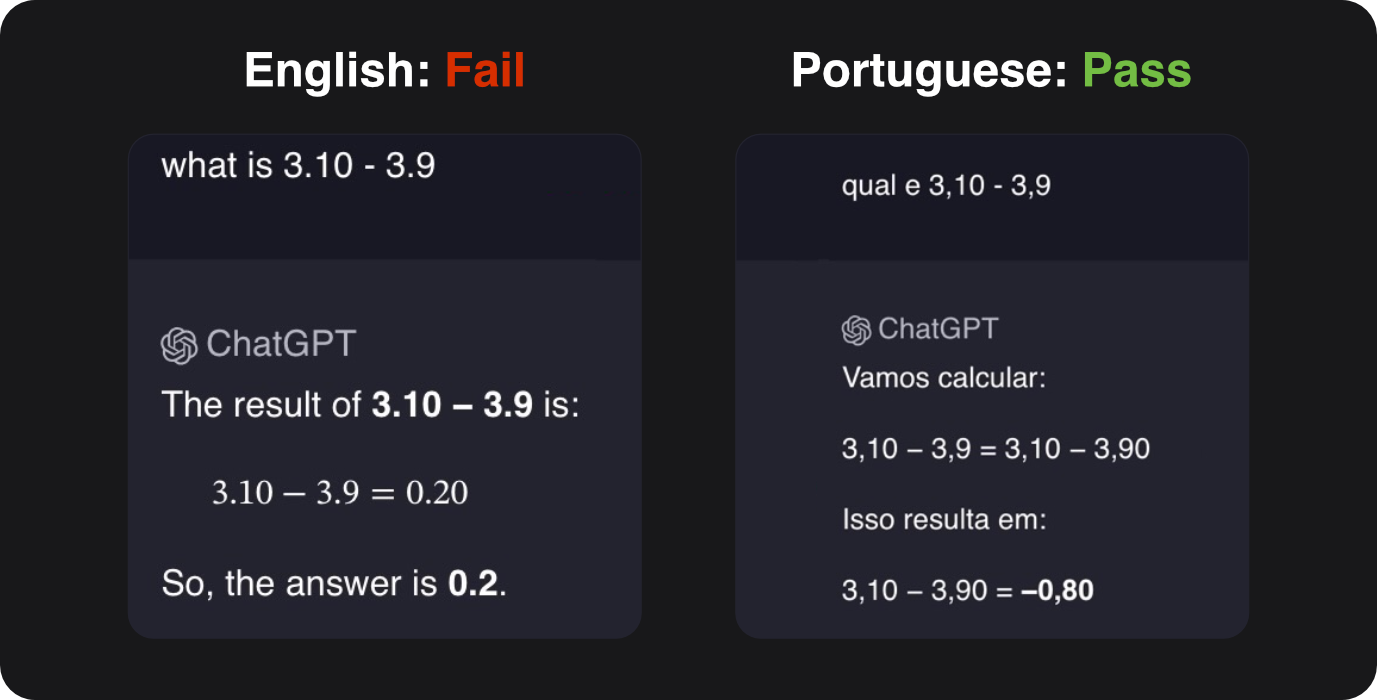

When asked what 3.10 - 3.9 is in English, it fails, but it succeeds in Portuguese if you format the numbers as you would in Portuguese, with commas instead of periods.

This is because… 3.10 and 3.9 often appear in the context of Python version numbers, and the model gets confused, assuming there is a 0.2 difference going from version 3.9 to 3.10 instead of properly doing the math.
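To make the two readings concrete, here's a rough Python sketch of the same pair of strings treated as decimals versus as version numbers. The helper names `parse_decimal` and `parse_version` are made up for illustration, and this only shows the arithmetic, not anything about how the model works internally.

```python
from decimal import Decimal


def parse_decimal(text: str) -> Decimal:
    # Hypothetical helper: accept "3.10" or the Portuguese-style "3,10".
    return Decimal(text.replace(",", "."))


def parse_version(text: str) -> tuple[int, ...]:
    # Hypothetical helper: "3.10" becomes (3, 10), compared component by component.
    return tuple(int(part) for part in text.split("."))


# Read as decimals, 3.10 is the smaller number, so the difference is negative.
decimal_difference = parse_decimal("3.10") - parse_decimal("3.9")
decimal_is_larger = parse_decimal("3.10") > parse_decimal("3.9")

# Read as versions, 3.10 comes after 3.9.
version_is_newer = parse_version("3.10") > parse_version("3.9")

print(decimal_difference)  # -0.80
print(decimal_is_larger)   # False
print(version_is_newer)    # True
```

As decimals the correct answer is -0.8, while the version reading puts 3.10 after 3.9, which is the ordering the model seems to be leaning on.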
