- cross-posted to:

- [email protected]

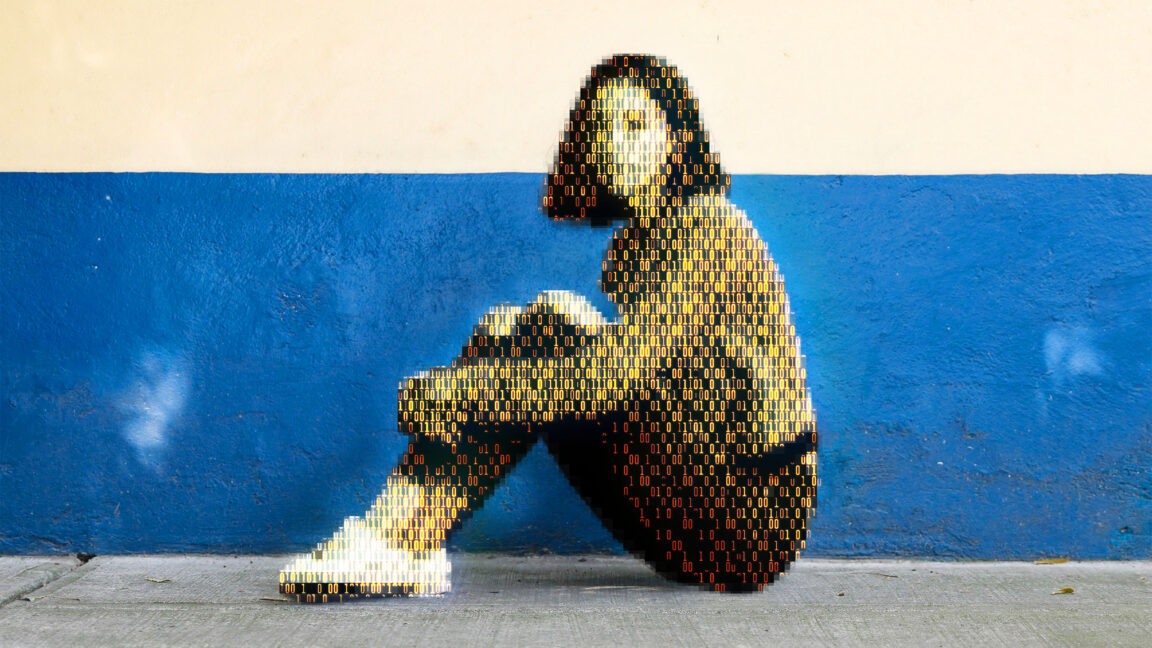

Today, a prominent child safety organization, Thorn, in partnership with a leading cloud-based AI solutions provider, Hive, announced the release of an AI model designed to flag unknown CSAM at upload. It’s the earliest AI technology striving to expose unreported CSAM at scale.

It differs in being something completely different. This is a classification model; it doesn’t have generative capabilities. Even if you were to get the model and its weights and tried to reverse engineer an “input” that it would classify as CP, it would most likely look like pure noise to you.

Moron

Generate porn, classify the output, and the result is very young-looking models.

Moron

So you need to have a model that generates CP to begin with. Flawless reasoning there.

Look, it’s clear you have no clue what you’re talking about. Stop demonstrating it, moron.

Not CP, but normal porn, then select on CP traits, moron

https://en.m.wikipedia.org/wiki/False_positives_and_false_negatives

Not that I think you will understand. I’m posting this mostly for those moronic enough to read your comments and think “that seems reasonable”

Thanks

Alright, I found the name of what I was thinking of that sounds similar to what they’re suggesting: generative adversarial network (GAN).

Applying a GAN won’t work. If used for filtering, it would skew the results toward a younger appearance, but it won’t show the body of a 9-year-old unless the model could do that from the beginning.

If used to “tune” the original model, it will result in massive hallucinations and aberrations that can result in false positives.

In both cases, decent results will be rare and time-consuming. Anybody with the dedication to attempt this already has pictures and can build their own model.

Source: I’m a data scientist

At least it’s not “Source: I am a pedophile” lol

The model I use (I forget the name) popped out something pretty sus once. I wouldn’t describe it as CP, but it was definitely weird enough to really make me uncomfortable. It’s the only thing it ever made that I immediately deleted and removed from the recycling bin too lol.

The point I’m making is that this isn’t as far-fetched as you believe.

Plus, you can merge models. Get a general purpose model that knows what children look like, a general purpose pornographic model, merge them, then start generating and selecting images based on Thorn’s classifier.

You can’t merge a generative model and a classification model. You can run them in series to get a bunch of false positives/hallucinations, but you can’t make it generate something from the other model.

When I said a “general purpose model that knows what children look like” I didn’t mean the classification model from the article. I meant a normal, general purpose image generation model. When I said “that knows what children look like” I meant that part of its training set includes children, because it’s sort of trained a little on everything. When I said “pornographic model” I meant a model trained exclusively on NSFW content (and not including any CSAM, but that may be generous depending on how much care was put into the model’s creation).