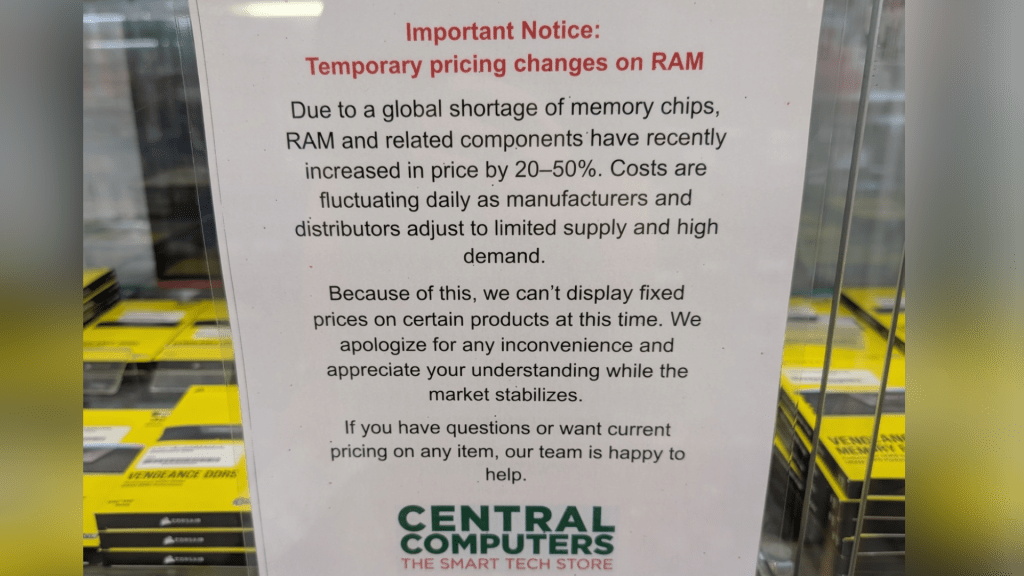

Generative “AI” data centers are gobbling up trillions of dollars in capital, not to mention heating up the planet like a microwave. As a result, there’s a capacity crunch in memory production that has sent RAM prices sky high, up more than 100 percent in the last few months alone. Multiple stores are tired of adjusting the prices day to day, and won’t even display them. You find out how much it costs at checkout.

Yeah, even less so with gen AI though I think.

Machine learning models have much different needs than crypto. Both run well on gaming GPUs, and both run even better on much higher-end GPUs, but ultimately machine learning models really, really need fast memory because they load the entire set of weights into graphics memory for processing. There are some tools that will push them to system memory, but these models are latency sensitive, so crossing the bus to pass tens of gigabytes of data between the GPU and system memory adds too much latency.
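To put rough numbers on that, here's a back-of-envelope sketch. Everything in it is an assumption for illustration: the 13B-parameter model is hypothetical, and the bandwidth figures are round numbers (about 1000 GB/s for high-end GPU memory, about 32 GB/s theoretical peak for a PCIe 4.0 x16 link), not measurements. It also only counts the weights and ignores activations and caches.

```python
# Back-of-envelope only; every figure here is an illustrative assumption.

BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}

def weights_gb(params_billion, dtype="fp16"):
    """Approximate size of the weights alone, in GB (1 GB = 1e9 bytes)."""
    return params_billion * BYTES_PER_PARAM[dtype]

def seconds_to_stream(size_gb, bandwidth_gb_s):
    """Time to read size_gb once at a given sustained bandwidth."""
    return size_gb / bandwidth_gb_s

# Hypothetical 13B-parameter model stored in fp16: 13 * 2 = 26 GB.
size = weights_gb(13, "fp16")

# Assumed round-number bandwidths in GB/s (not measured values).
VRAM_BW = 1000.0  # high-end GPU memory
PCIE_BW = 32.0    # PCIe 4.0 x16, theoretical peak

print(f"weights: {size:.1f} GB")
print(f"one pass from VRAM: {seconds_to_stream(size, VRAM_BW):.3f} s")
print(f"one pass over PCIe: {seconds_to_stream(size, PCIE_BW):.3f} s")
```

Under those assumptions, reading the weights once over the bus is roughly 30 times slower than reading them from graphics memory, which is the gap the paragraph above is pointing at.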

Machine learning also has the aspect of training vs. inference. Training takes a long time, takes less time with more or faster compute, and you simply can’t do anything with the model while it’s training. Inference is still compute heavy, but it doesn’t require anywhere near as much as the training phase. So organizations will typically rent as much hardware as possible for the training phase to get the model running as quickly as possible, so they can move on to making money as quickly as possible.

In terms of GPU availability, this means they’re going to target high-end GPUs, such as packing AI developer stations full of 4090s and whatever the heck Nvidia replaced the Tesla series with. Some of the new SoCs with shared system memory and VRAM, such as AMD’s and Apple’s, also fill a niche for AI developers and AI enthusiasts, since that enables large amounts of high-speed video memory for relatively low cost. Realistically, the biggest impact AI is having on the gaming GPU space is that it’s changing the calculation AMD, Nvidia and Intel make when planning out their SKUs, so they’re likely being stingy on memory specs for lower-end GPUs to push anyone with specific AI models they’re looking to run toward much more expensive GPUs.