Clear and wave are the only real glass bricks, the rest is mental illnesses

- 5 Posts

- 238 Comments

4·11 days ago

This is something out of Disco Elysium

18·13 days ago

He didn’t, I am saying it right now. I’m propagandating (propagating? Propaganding?) on behalf of my anti-statistics agenda. Calling it a capitalist tool of misinformation is a statement targeted to this audience. Calling for deportation of statisticians is to add graphicity and strength to my statement. The quotes make it look like someone’s quote.

You see, I’m trying to pick up this piece of advice for myself

Noooo, Mickey! You fell for the AI

112·13 days ago

“Statistics is how the capitalist manufacture their lies. We should abolish it and deport all statisticians”

The day after it’s fine. The next day it’s meh. Provided you keep it in a paper bag and not out in the air

The 0.62€ industrial baguette I buy at Despar is fine and not dry, despite being industrial

Plain bread is perfectly fine as long as it’s not one of those super dry breads

5·18 days ago

She looks like no shape a human body would produce

2·19 days ago

You know, Hideki Konno predicted all this

I only did paintball once, and that place didn’t use gelatin balls, I think they were made of a thin bioplastic film (corn stuff) filled with ink

The one on the right is hotter

This looks like a success to me. Just imagine this: replace the thicket with the enemy army, you fire one shot and the rest of the army retreats.

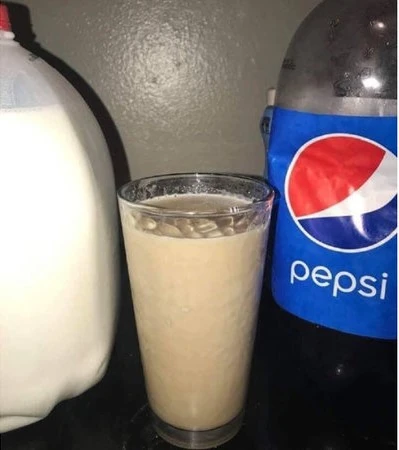

Pepsi and milk

🫣

Wait, how old is this guy? 🤨

Also, this doesn’t answer how awkward the shittalk was

8·1 month ago

It should make a cartoonish “sproioioioing”

Yes, do

I’m mostly curious about how awkward the talking part was, and if the 200£ were real

Well, did you?

Things like this remind me of Terry Davis, who wrote a random word generator and (due to schizophrenia) believed it was God speaking.

https://youtu.be/xWQg30P866A